How we fixed the GCP Cloud NAT port issue

At Deriv, we decided to become cloud-agnostic by moving some of our services from AWS to GCP. Most of our services and databases still run on AWS.

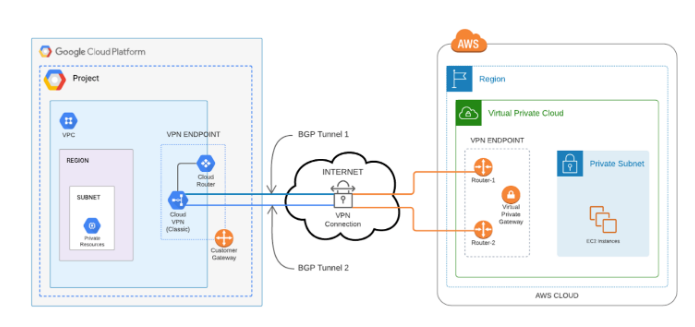

One of our main security concerns was not exposing our services to the public network. We therefore decided to route traffic between the two clouds over a private network, using an IPsec VPN tunnel between GCP and AWS to secure the communication channel.

Site-to-site VPN Connection:

The key concepts involved in peering AWS with GCP fall into two groups.

First, the key concepts for the site-to-site VPN on the AWS side:

- VPN connection: A secure connection between your VPCs on AWS and a remote network, in our case GCP.

- VPN tunnel: An encrypted link over which data passes between the remote network and AWS. Each AWS site-to-site VPN connection includes two tunnels.

- Customer gateway: An AWS resource that gives AWS information about your gateway device. In our case, the device is the GCP Cloud VPN gateway, so each customer gateway on AWS is associated with one Cloud VPN gateway interface and its external IP address.

- Virtual private gateway: The VPN endpoint on the Amazon side of a site-to-site VPN connection. It can be attached to a single VPC.

Second, the key concepts on the GCP side:

- Cloud Router: A fully distributed, managed Google Cloud service that provides dynamic routing for your VPC networks using BGP.

- Cloud NAT: A managed Google Cloud service that lets resources without external IP addresses create outbound connections to the internet.

- Cloud VPN gateway: A Google-managed VPN gateway running on Google Cloud. Each Cloud VPN gateway is a regional resource with one or two interfaces. We used two interfaces, interface 0 and interface 1, each with its own external IP address. Each Cloud VPN gateway is connected to a peer VPN gateway, which holds the public IP addresses of the AWS tunnels.

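- Peer VPN gateway: A GCP resource that represents the AWS end of the connection and is connected to the Cloud VPN gateway. All tunnels use this gateway on the GCP end, and it has one external IP address per tunnel: the public IP of the corresponding AWS tunnel.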

- Cloud VPN tunnels: A VPN tunnel connects two VPN gateways (one on AWS and one on GCP) and serves as a virtual tunnel through which encrypted traffic passes.

High availability of the tunnels:

High availability (HA) is a challenging and crucial aspect when building any critical infrastructure.

We set up our HA tunnels using redundant site-to-site VPN connections and customer gateways. The tunnels are active at all times, so connectivity isn't interrupted if any one tunnel goes down.

To achieve this, we configured two interfaces on each Cloud VPN gateway on GCP, so that each interface can be paired with a different customer gateway on AWS. Each of these two customer gateways is associated with its own site-to-site VPN connection and tunnels.
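The pairing described above can be modelled in a few lines of Python. This is a minimal sketch, not our actual configuration: it assumes the usual GCP-to-AWS HA VPN layout, where each AWS site-to-site VPN connection contributes two tunnels (four tunnels in total) and the GCP peer VPN gateway has one interface per AWS tunnel IP. Resource names such as `cgw-0` are hypothetical, and the code only describes the topology; it creates nothing.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional


@dataclass(frozen=True)
class Tunnel:
    gcp_interface: int     # Cloud VPN gateway interface on GCP (0 or 1)
    customer_gateway: str  # AWS customer gateway holding that interface's IP (hypothetical name)
    aws_tunnel: int        # tunnel index inside the AWS VPN connection (0 or 1)
    peer_interface: int    # peer VPN gateway interface on GCP (0..3), one per AWS tunnel IP


def build_ha_tunnels() -> list[Tunnel]:
    """Pair each GCP interface with its own customer gateway and VPN connection."""
    return [
        Tunnel(
            gcp_interface=iface,
            customer_gateway=f"cgw-{iface}",
            aws_tunnel=aws_tunnel,
            peer_interface=2 * iface + aws_tunnel,
        )
        for iface, aws_tunnel in product(range(2), range(2))
    ]


def remaining_tunnels(
    tunnels: list[Tunnel],
    failed_interface: Optional[int] = None,
    failed_cgw: Optional[str] = None,
    failed_tunnel: Optional[Tunnel] = None,
) -> list[Tunnel]:
    """Tunnels still usable after a GCP interface, customer gateway, or single tunnel fails."""
    return [
        t for t in tunnels
        if t.gcp_interface != failed_interface
        and t.customer_gateway != failed_cgw
        and t != failed_tunnel
    ]


if __name__ == "__main__":
    tunnels = build_ha_tunnels()
    for t in tunnels:
        print(t)
    # Any single-component failure still leaves at least one active tunnel.
    assert all(remaining_tunnels(tunnels, failed_tunnel=t) for t in tunnels)
    assert all(remaining_tunnels(tunnels, failed_interface=i) for i in range(2))
    assert all(remaining_tunnels(tunnels, failed_cgw=f"cgw-{i}") for i in range(2))
```

In this model, losing either GCP interface or either customer gateway still leaves two tunnels up, which is what lets an active/active layout like this tolerate a single failure.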

Challenges faced when the project went live:

Our applications usually make more than 100 API calls per second and open many concurrent connections from GCP to the servers, services, and databases hosted on AWS. We didn't realise this would become a bottleneck.

We redirected traffic to the GCP workloads simply by changing some DNS records on Cloudflare. The applications worked fine for a while, but then the API queue would pile up and the applications would start responding slowly to clients.

We troubleshot by running a few scripts to check connectivity, API call timing, and so on, and eventually traced the problem to a port limitation in Google Cloud NAT.
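Our actual scripts aren't reproduced here, but a connectivity check along these lines is one way to surface this kind of limit: it opens many TCP connections to a single destination at the same time, holds them open, and records how long each connect takes. This is a minimal sketch; the host, port, and connection count are placeholders you would replace.

```python
import socket
import time
from concurrent.futures import ThreadPoolExecutor


def probe(host: str, port: int, connections: int, timeout: float = 3.0):
    """Open `connections` TCP connections to one destination and hold them open.

    Returns (succeeded, failed, connect_times_in_seconds). All sockets stay open
    until every attempt has finished, so the connections are truly concurrent,
    which is what consumes per-destination NAT source ports.
    """

    def connect_once(_: int):
        start = time.monotonic()
        try:
            sock = socket.create_connection((host, port), timeout=timeout)
        except OSError:  # refused, timed out, unreachable, ...
            return None, None
        return sock, time.monotonic() - start

    with ThreadPoolExecutor(max_workers=max(1, min(connections, 64))) as pool:
        results = list(pool.map(connect_once, range(connections)))

    open_socks = [sock for sock, _ in results if sock is not None]
    connect_times = [t for _, t in results if t is not None]
    for sock in open_socks:
        sock.close()
    return len(open_socks), connections - len(open_socks), connect_times
```

For example, `probe("10.20.0.15", 443, 200)` (a placeholder address) reports how many of 200 simultaneous connections to one AWS-hosted endpoint succeeded and how long each took. If successes stop at a fixed number while the remaining attempts time out, something between the client and the destination is likely capping connections per destination.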

We didn't have time to troubleshoot further, so we decided to roll back and test the proposed fixes thoroughly before trying again.

Improvements that resolved the bottlenecks:

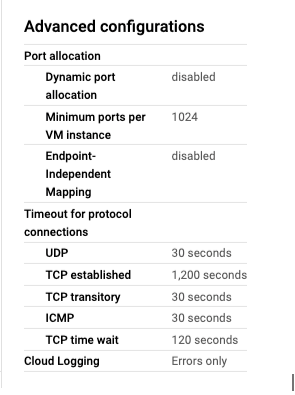

The root cause of the issue was a default setting of Cloud NAT on GCP. By default, the Minimum ports per VM instance value is 64. This means each VM can have only 64 simultaneous connections to the same destination IP address, port, and protocol.

Google's Cloud NAT documentation explains in more detail how Cloud NAT gateways use IP addresses and ports.

Since our GCP servers were opening more than 100 connections to the same servers hosted on AWS, new connections failed once a VM had used up its default 64 ports.
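The mechanism can be illustrated with a toy model of the NAT source ports allocated to one VM. This is a simplification, not how Cloud NAT is implemented: it assumes a fixed (static) allocation of `ports_per_vm` ports per VM, ignores connection teardown and timeouts, and uses a made-up destination address. A source port can be reused for different destinations, but simultaneous connections to the same destination IP, port, and protocol each need a different one.

```python
from collections import defaultdict


class VmNatAllocation:
    """Toy model of the NAT source ports allocated to a single VM."""

    def __init__(self, ports_per_vm: int, first_port: int = 1024):
        # Which ports the VM receives is arbitrary here; only the count matters.
        self.ports = range(first_port, first_port + ports_per_vm)
        # Destination 3-tuple (IP, port, protocol) -> source ports currently in use.
        self.in_use = defaultdict(set)

    def connect(self, dest: tuple[str, int, str]):
        """Return the NAT source port used, or None if this destination is out of ports."""
        used = self.in_use[dest]
        for port in self.ports:
            if port not in used:
                used.add(port)
                return port
        return None  # no free port for this destination: the connection is dropped


def open_connections(ports_per_vm: int, count: int, dest: tuple[str, int, str]):
    """Open `count` simultaneous connections to one destination; return (ok, dropped)."""
    vm = VmNatAllocation(ports_per_vm)
    ok = sum(vm.connect(dest) is not None for _ in range(count))
    return ok, count - ok


dest = ("10.1.2.3", 443, "tcp")  # hypothetical AWS-hosted endpoint
print(open_connections(64, 120, dest))    # (64, 56)
print(open_connections(1024, 120, dest))  # (120, 0)
```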

Finally, we increased Minimum ports per VM instance to 1,024, which resolved the issue.
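Raising the per-VM minimum has a cost worth planning for: each VM reserves more ports, so fewer VMs fit on one NAT IP address. The arithmetic below assumes static port allocation and the 64,512 source ports (1024 to 65535) that Cloud NAT can use per NAT IP address; the VM count of 100 in the example is made up.

```python
import math

# Cloud NAT can use source ports 1024-65535 on each NAT IP address.
PORTS_PER_NAT_IP = 65_536 - 1_024  # 64,512


def vms_per_nat_ip(min_ports_per_vm: int) -> int:
    """How many VMs one NAT IP can serve when each VM reserves min_ports_per_vm ports."""
    return PORTS_PER_NAT_IP // min_ports_per_vm


def nat_ips_needed(vm_count: int, min_ports_per_vm: int) -> int:
    """Smallest number of NAT IP addresses that covers vm_count VMs."""
    return math.ceil(vm_count / vms_per_nat_ip(min_ports_per_vm))


for ports in (64, 1024):
    print(ports, vms_per_nat_ip(ports), nat_ips_needed(100, ports))
# 64 1008 1
# 1024 63 2
```

Going from 64 to 1,024 ports per VM cuts the VMs a single NAT IP can serve from 1,008 to 63, so a larger fleet may need additional NAT IP addresses.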

Now, our services are running on both AWS and GCP.